Machine learning is the process of using an algorithm to learn from past data and generalize it in order to make predictions about future data.

When fitting a machine learning algorithm, we minimize error, cost, or loss because of function optimization. A predictive modeling project includes optimization for machine learning during data prepping, hyperparameter tuning, and model selection.

During this stage, a machine learning algorithm creates a parameterized mapping function (e.g. a weighted sum of inputs) and an optimization algorithm is used to fund the values of the parameters (e.g. model coefficients) that minimize the error of the function when used to map inputs to outputs. Keep reading and learn more.

Table of Contents

What Is Function Optimization?

A function’s maximum or minimum value is found through function optimization. Any structure is acceptable as long as the function generates numerical values.

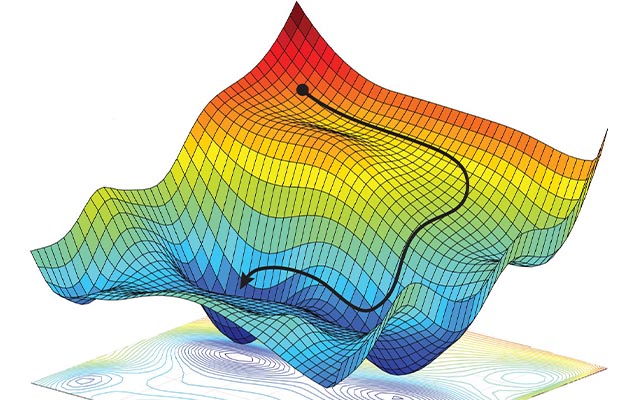

How can we determine the maximum or minimum of a function if it is a black box? To determine which input will result in the best output, we can examine every conceivable option. Alternatively, we can assume that the function will behave a certain way, such that the output is continuous with respect to the input, and take advantage of this by, for instance, using a hill-climbing algorithm. There are many optimization algorithms, and each one was developed using a set of heuristics or assumptions. The best one might not be appropriate for another task.

Machine Learning And Optimization

The challenge of function optimization is to identify the set of inputs to a target objective function that produces the function’s minimum or maximum value.

Due to the function’s unknown structure, which is frequently non-differentiable and noisy, and its potential for having tens, hundreds, thousands, or even millions of inputs, it can be a difficult problem.

- Function Optimization: Find the set of inputs that causes an objective function to be at its minimum or maximum.

Function approximation can be used to describe machine learning. In order to make predictions about future data, it is necessary to approximate the unidentified underlying function that converts examples of inputs to outputs.

It can be difficult because there are frequently few examples from which we can extrapolate the function, and the structure of the extrapolated function is frequently nonlinear, noisy, and might even contain contradictions.

- Function Approximation: Generate a reusable mapping function from specific examples to make predictions about fresh examples.

More often than not, function optimization is easier than function approximation.

It’s significant to note that function optimization is frequently used in machine learning to address the issue of function approximation.

An optimization algorithm is the fundamental building block of almost all machine learning algorithms.

In addition, the process of working through a predictive modeling problem involves optimization at multiple steps in addition to learning a model, including:

- choosing a model’s hyperparameters.

- Choosing the transforms to apply to the data prior to modeling

- Deciding on a modeling process to use for the finished model.

Now that we are aware of the crucial role that optimization plays in machine learning, it is time to examine some examples of learning algorithms and how they employ optimization.

Optimization Used In A Machine Learning Project

In addition to fitting the learning algorithm to the training dataset, optimization plays a significant role in a machine-learning project.

The process of cleaning the data before fitting a model and the process of fine-tuning a selected model can both be framed as optimization problems. In fact, a whole predictive modeling project can be viewed as a massive optimization problem.

Let’s examine each of these instances in more detail one at a time.

Data Preparation As Optimization

Data preparation entails putting raw data in a format that the learning algorithms will find most useful.

Scaling values, dealing with missing values, and altering the probability distribution of variables may all be involved.

In order to satisfy the expectations or requirements of particular learning algorithms, transforms can be used to change the representation of the historical data. However, when the expectations are broken or when a transformation that has nothing to do with the data is carried out, sometimes the best or best results can be obtained.

Choosing which transforms to use on the training data can be viewed as a search or optimization problem aimed at revealing the data’s unidentified underlying structure to the learning algorithm in the most effective way.

- Data Preparation: Sequences of transforms, which are optimization problems, are the function inputs. This calls for an iterative global search algorithm.

Manual trial and error is a common method used to solve this optimization problem. Nevertheless, it is feasible to automate this process using a global optimization algorithm, where the inputs to the function are the kinds and sequences of transforms applied to the training data.

It may be possible to conduct an exhaustive search or a grid search of frequently used sequences because the number and permutations of data transforms are typically quite constrained.

Hyperparameter Tuning As Optimization

In order to adapt a machine learning algorithm to a particular dataset, it has hyperparameters that can be set.

Many hyperparameters’ dynamics are well understood, but it is unknown exactly what impact they will have on how well the resulting model performs given a particular dataset. In light of this, it is common practice to test a range of values for important algorithm hyperparameters for a particular machine learning algorithm.

Hyperparameter optimization or tuning is what this is.

A naive optimization algorithm, like a random search algorithm or a grid search algorithm, is frequently used for this purpose.

- Hyperparameter Tuning: Algorithm hyperparameters are function inputs; these optimization issues call for an iterative global search algorithm.

The use of an iterative global search algorithm for this optimization issue is nevertheless becoming more widespread. A Bayesian optimization algorithm that has the ability to simultaneously optimize and approximate the target function (using a surrogate function) is a popular option.

As evaluating a single set of model hyperparameters requires fitting the model to the entire training dataset once or multiple times, depending on the model evaluation procedure chosen (e.g. repeated k-fold cross-validation).

Model Selection As Optimization

The process of selecting a machine learning model from a large pool of potential models is known as model selection.

Choosing the machine learning algorithm or pipeline that creates a model is really what it comes down to. The final model is then trained using this, and it can be applied in the desired application to make predictions based on fresh data.

A machine learning practitioner will frequently perform this model selection manually, which includes steps like data preparation, candidate model evaluation, model tuning, and model selection.

This could be seen as an optimization issue encompassing all or a portion of the predictive modeling project.

- Model Selection: Data transformation, the machine learning algorithm, and the algorithm hyperparameters are the function inputs; the optimization problem calls for an iterative global search algorithm.

This is increasingly true as automated machine learning (AutoML) algorithms are used to select an algorithm, an algorithm and hyperparameters, or data preparation, algorithm, and hyperparameters with little to no user involvement.

Given that each function evaluation is expensive, it is typical to use a global search algorithm that also roughly approximates the objective function, such as Bayesian optimization.

Modern machine learning as a service (MLaaS) products offered by organizations like Google, Microsoft, and Amazon are also based on this automated optimization approach to machine learning.

Even though they are quick and effective, hand-crafted models created by highly skilled professionals, such as those taking part in machine learning competitions, still outperform these approaches.

Read More: Regularization In Machine Learning