Wondering about GPU machine learning? We define a GPU and discuss why machine learning is a good application for its processing power.

In the ever-expanding landscape of ML accelerators, GPUs remain the technology that most consistently powers cutting-edge ML research and many practical ML applications. Understanding how they work is therefore crucial for ML practitioners.

To learn more, keep reading!

What is Machine Learning?

Machine learning is an important area of research and engineering that studies how algorithms can learn to perform specific tasks from data, in some cases at or above the level of humans.

The focus here is on learning and how computers can pick up new skills while operating in various environments and with a variety of inputs. A branch of the larger field of artificial intelligence, machine learning has been around for many years.

Research and development in artificial intelligence and machine learning have a long history in academia, large corporations, and popular culture. However, for much of the period from the 1960s through the 1990s, intelligent machines and efficient learning methods struggled to achieve widespread adoption.

Expert systems, natural language processing, and robotics are examples of specialized applications that used learning techniques in one way or another, but outside these areas, machine learning seemed to be a niche field of study.

What is a GPU?

Graphics processing units, or GPUs, are specialized processors originally designed to accelerate graphics rendering for gaming. They have since been used successfully in applications well outside that intended scope because they can efficiently parallelize massive computational workloads.

Given the growth of big data and ever-larger deep learning models, it is not surprising that ML applications are among the most successful of these.

While manufacturers commonly tune GPU families to particular applications to maximize performance, all GPUs share the same fundamental physical components. A graphics card is a printed circuit board, similar to a motherboard, carrying a processor for computation and a BIOS that stores settings and runs diagnostics.

In terms of memory, you can distinguish between dedicated GPUs, which are independent of the CPU and have their own VRAM, and integrated GPUs, which are located on the same die as the CPU and share system RAM. Machine learning works best on dedicated GPUs.
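To make the dedicated-VRAM point concrete, here is a rough Python (3.9+) sketch of a back-of-the-envelope check practitioners often do: multiply a model's parameter count by the bytes per parameter to see whether its weights fit in a card's VRAM. The function names and precision table are illustrative assumptions, not part of any library, and the estimate deliberately counts weights only.

```python
# Bytes needed to store one parameter at a few common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gib(num_params: int, precision: str = "fp32") -> float:
    """Approximate memory, in GiB, needed to hold a model's weights.

    Assumption: counts weights only. Activations, gradients, optimizer
    state, and framework overhead all add to real VRAM usage.
    """
    return num_params * BYTES_PER_PARAM[precision] / 2**30


def fits_in_vram(num_params: int, vram_gib: float, precision: str = "fp32") -> bool:
    """Rough check: would the weights alone fit in a card with vram_gib of VRAM?"""
    return weights_gib(num_params, precision) <= vram_gib
```

For a hypothetical 7-billion-parameter model, `weights_gib(7_000_000_000, "fp32")` comes to about 26 GiB, more than a 24 GiB card holds, while the fp16 weights need only about 13 GiB. Training needs considerably more than this weights-only figure.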

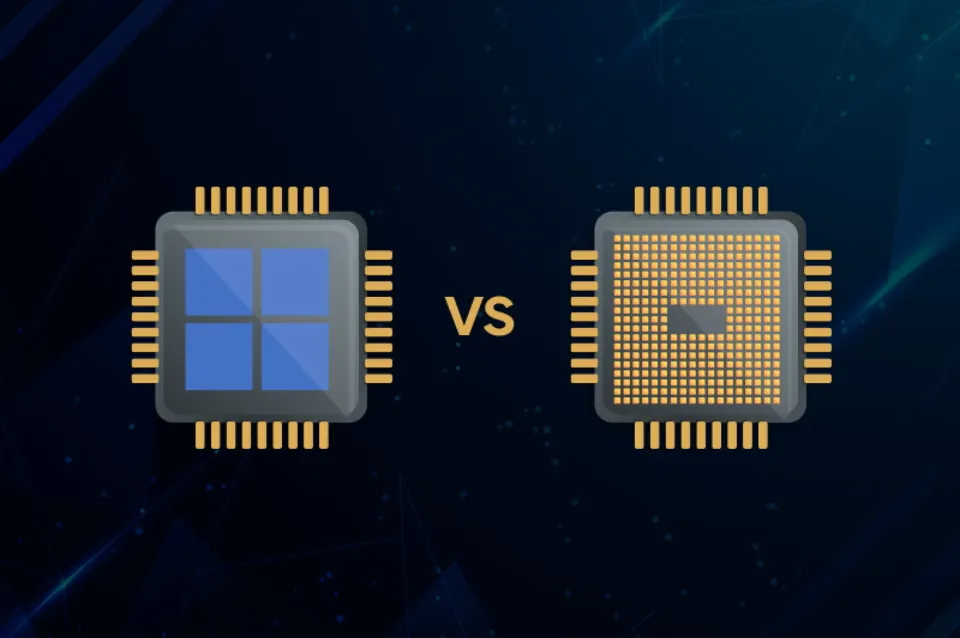

Why Use a GPU Vs. a CPU for Machine Learning?

At first glance, the obvious hardware setup for a contemporary AI or machine-learning workload would include faster, more powerful CPUs to meet its high performance requirements.

As many machine-learning engineers are discovering, however, modern CPUs aren’t always the best tool for the job. For this reason, they are turning to graphics processing units (GPUs).

On the surface, a GPU’s advantage over a CPU is that it can render high-resolution video games and movies more effectively. Look closer, though, and it quickly becomes clear that their differences are far more marked when it comes to managing particular workloads.

CPUs and GPUs work in fundamentally different ways:

- A CPU handles the majority of a computer’s processing tasks, so it must be fast and adaptable. Specifically, CPUs are built to handle any task a typical computer might perform: accessing hard drive storage, logging inputs, moving data from cache to memory, and so on. To support the general-purpose operations of a workstation or even a supercomputer, CPUs must be able to switch between multiple tasks quickly.

- A GPU is designed from the ground up to render high-resolution images and graphics almost exclusively, a job that doesn’t require much context switching. Instead, GPUs emphasize parallelism: breaking down complex tasks (such as the identical computations used to create lighting, shading, and texture effects) into smaller subtasks that can be carried out simultaneously.
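The GPU-style decomposition described in the second bullet can be sketched in plain Python. This is a hypothetical illustration, not how a real graphics pipeline is written: `shade` is a simplified stand-in for a per-pixel lighting calculation, and a thread pool stands in for GPU cores. Because of CPython’s global interpreter lock, the threads won’t actually speed up this pure-Python work; the point is the structure, in which one identical operation is split into independent chunks.

```python
from concurrent.futures import ThreadPoolExecutor


def shade(normal_dot_light: float) -> float:
    """Identical per-pixel computation: a simplified diffuse lighting term,
    clamped so surfaces facing away from the light get zero brightness."""
    return max(0.0, normal_dot_light)


def shade_chunk(chunk: list[float]) -> list[float]:
    # Each subtask applies the same operation to its own slice of pixels.
    return [shade(x) for x in chunk]


def shade_image(pixels: list[float], num_workers: int = 4) -> list[float]:
    """Split pixels into independent chunks and shade them concurrently.

    No chunk depends on another, so the chunks can run in any order or at
    the same time, which is the property GPUs exploit across many cores.
    """
    size = max(1, -(-len(pixels) // num_workers))  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        results = pool.map(shade_chunk, chunks)  # results keep chunk order
    return [value for chunk in results for value in chunk]
```

A CPU-oriented design would instead optimize for switching quickly between unrelated tasks; here every worker runs the same code on different data.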

Supporting parallel computing takes more than raw power. CPU performance has long been shaped by Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years; GPUs instead gain their advantage by tailoring hardware and computing configurations to a particular class of problem.

This approach to parallel computing, known as Single Instruction, Multiple Data (SIMD), lets engineers efficiently distribute workloads that apply the same operation to many data elements across GPU cores.
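The toy simulation below illustrates what "single instruction, multiple data" means: each step issues one operation that is applied across a whole group of lanes, so n elements need about n / lanes instruction issues instead of n. The `simd_execute` function and its 8-lane default are assumptions chosen for illustration; real hardware vector widths vary.

```python
from typing import Callable


def simd_execute(op: Callable[[float, float], float],
                 a: list[float], b: list[float], lanes: int = 8):
    """Simulate SIMD execution: each step issues ONE instruction (op)
    that is applied to up to `lanes` data elements at once.

    Returns the element-wise results and the number of instruction issues.
    """
    if len(a) != len(b):
        raise ValueError("operands must have the same length")
    out: list[float] = []
    issues = 0
    for start in range(0, len(a), lanes):
        # One instruction, multiple data: the same op runs on every lane.
        lane_a = a[start:start + lanes]
        lane_b = b[start:start + lanes]
        out.extend(op(x, y) for x, y in zip(lane_a, lane_b))
        issues += 1
    return out, issues
```

For example, adding two 10-element lists with `lanes=4` takes 3 instruction issues, where a purely scalar loop would take 10.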

So why are GPUs a good fit for machine learning? Machine learning fundamentally depends on feeding ever-larger data sets into an algorithm to improve its capabilities: the more data there is, the more effectively the algorithm can learn from it. Processing all that data means applying the same operations, largely matrix arithmetic, to enormous numbers of values, which is exactly the kind of work GPUs parallelize well.

Parallel computing is especially valuable for deep learning algorithms and neural networks, whose complex, multi-step training processes are built from huge numbers of computations that can run side by side.
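A fully connected neural network layer shows why this workload parallelizes so well. In the minimal sketch below (hypothetical helpers, with ReLU chosen as the activation for illustration), each output is an independent dot product over the same input, so a GPU can assign different outputs, and different samples in a batch, to separate cores.

```python
def dense_forward(weights: list[list[float]], bias: list[float],
                  x: list[float]) -> list[float]:
    """One fully connected layer with ReLU: y = relu(W x + b).

    Each output element is its own dot product, and no output depends on
    any other, so all of them could be computed in parallel.
    """
    if len(weights) != len(bias):
        raise ValueError("one bias value per output row is required")
    outputs = []
    for row, b in zip(weights, bias):
        if len(row) != len(x):
            raise ValueError("weight row length must match input size")
        z = sum(w * xi for w, xi in zip(row, x)) + b
        outputs.append(max(0.0, z))  # ReLU activation
    return outputs


def dense_forward_batch(weights: list[list[float]], bias: list[float],
                        batch: list[list[float]]) -> list[list[float]]:
    # Every sample in the batch is independent of the others, too.
    return [dense_forward(weights, bias, x) for x in batch]
```

On a GPU, a framework would express the batch as a single matrix multiplication rather than Python loops, but the independence of the individual dot products is what makes that parallel execution possible.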

What Should You Look for in a GPU?

Because GPU technology has become so sought after, not only in the machine-learning industry but in computing in general, a wide range of consumer- and enterprise-grade GPUs is now available on the market.

Generally speaking, if you are choosing a GPU for a machine-learning hardware configuration, the most important specifications include the following:

- High memory bandwidth: Because GPUs process data in parallel, they are built with high memory bandwidth to keep their many cores supplied with data. A GPU can access a lot of data from memory at once, in contrast to a CPU, which works largely sequentially (and imitates parallelism through context switching). Depending on your workload, a combination of more VRAM and higher bandwidth is usually preferable.

- Tensor cores: Tensor cores are specialized units that accelerate matrix multiplication, increasing throughput and reducing latency. Not every GPU has them, but as the technology develops they are becoming more prevalent, even in GPUs designed for consumers.

- Larger shared memory: GPUs with larger L1 caches keep data closer to the cores, which speeds up data processing, but this fast on-chip memory is expensive. Although GPUs with more cache are generally better, there is a trade-off between price and performance, especially if you buy GPUs in large quantities.

- Interconnection: For high-performance workloads, a cloud or on-premises GPU solution will typically connect several units to one another. However, not all GPUs can be interconnected, so make sure the units you choose are compatible with one another and with your planned configuration.
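Memory bandwidth, the first specification above, can be estimated from two datasheet figures. The sketch below uses the standard relationship, peak bandwidth = bus width (bits) × per-pin data rate ÷ 8, and then computes the minimum time to read a block of data once at that rate, which bounds memory-bound steps such as reading all of a model’s weights. The function names and example figures are hypothetical.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface (e.g. 256 or 384 bits).
    data_rate_gbps: effective data rate per pin, in Gbit/s.
    Bits moved per second = width * rate; divide by 8 to get bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


def min_stream_time_ms(num_bytes: float, bandwidth_gb_s: float) -> float:
    """Lower bound on the time (ms) to read num_bytes once at peak bandwidth.

    Real kernels rarely sustain peak bandwidth, so actual times are longer.
    """
    return num_bytes / (bandwidth_gb_s * 1e9) * 1e3
```

With a hypothetical 384-bit bus at 21 Gbit/s per pin, `peak_bandwidth_gb_s(384, 21.0)` returns 1008.0 GB/s, so streaming 14 GB of weights takes at least about 14 ms. This is why, for large models, more bandwidth can matter as much as more compute.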

Note that large-scale operations typically don’t buy GPUs outright unless they run their own dedicated processing cloud. Instead, businesses that rely on high-performance computing buy cloud capacity (public, hybrid, or otherwise) designed for machine-learning workloads. Ideally, these cloud providers’ platforms include high-performance GPUs and fast memory.

Bottom Line: GPU Machine Learning

GPUs are well suited to training artificial intelligence and deep learning models because they can process many computations simultaneously.

The market has seen a variety of accelerators emerge over the past five years, including Google’s TPUs, which are highly specialized for particular machine learning (ML) applications.

FAQs

What Is the Advantage of GPUs in Machine Learning?

Because they can process many computations at once, GPUs are well suited to training artificial intelligence and deep learning models.

Why Are GPUs Used for Deep Learning?

Deep learning depends on feeding large data sets through models to enhance and improve their capabilities, and GPUs can perform the required computations in parallel.

Is a GPU Necessary for Machine Learning?

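For deep learning, a good GPU is essential in practice. Smaller models and many classical machine-learning algorithms, however, can still be trained on a CPU.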