Currently, a lot of teams begin by manually labeling their datasets, but more are turning to time-saving techniques to partially automate the process, like active learning.

Active learning is a branch of machine learning where a learning algorithm can interact with a user to query data and label it with the desired outputs.

Since data is constantly becoming more affordable to collect and store, there is an increasing problem in machine learning with the amount of unlabeled data. This results in more data being available to data scientists than they can handle. Active learning enters the picture here.

Table of Contents

What Is Active Learning?

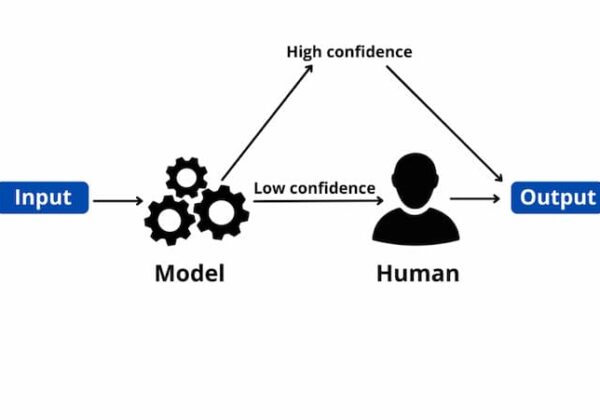

In semi-supervised machine learning, active learning allows the algorithm to pick the data it wants to learn from. This method allows the program to actively query an authority source, such as the programmer or a labeled dataset, to discover the accurate prediction for a particular problem.

This iterative learning approach aims to expedite learning, especially if you lack a significant labeled dataset to apply conventional supervised learning techniques.

In the field of labeling-intensive Natural Language Processing, active learning is one of the most widely used techniques. With less human involvement, this technique can achieve outcomes comparable to those of supervised learning.

When Is Active Learning Beneficial?

Active learning has been particularly helpful for natural language processing because building NLP models requires training datasets that have been tagged to indicate parts of speech, named entities, etc. It can be difficult to find datasets that have this tagging and have a sufficient number of distinct data points.

Medical imaging and other situations where there is a limited amount of data that a human annotation can label as necessary to aid the algorithm have benefited from active learning. Although the process can occasionally be slow because the model needs to be constantly adjusted and retrained based on incremental labeling updates, it can still be faster than using traditional data collection techniques.

How Does Active Learning Function?

Multiple circumstances call for the use of active learning. In general, the choice of whether or not to query a given label depends on whether the benefit of doing so outweighs the expense of acquiring the information. Based on the data scientist’s budgetary constraints and other variables, this decision-making may actually take a number of different forms.

The following are the three types of active learning:

Pool-based Sampling

The most well-known active learning scenario is this one. In this sampling technique, the algorithm makes an effort to assess the entire dataset before choosing the best query or set of queries. It is common practice to train the active learner algorithm on a fully labeled portion of the data, which is then used to decide which instances would be most useful to add to the training set for the following active learning loop. The method’s drawback is the potential memory usage.

Membership Query Synthesis

Due to the creation of artificial data, this scenario is not applicable in all situations. In this approach, the active learner is free to develop their own examples for labeling. When creating a data instance is simple, this approach works well.

Stream-based Selective Sampling

In this case, the algorithm evaluates whether it would be advantageous to query for the label of a particular unlabeled entry in the dataset. The model is given a data instance while it is being trained and decides right away whether it wants to query the label. The lack of assurance that the data scientist will stay within budget presents a natural drawback to this strategy.

Steps For Active Learning

When labeling data points and improving an approach, there are a variety of methods that have been studied in the literature. Nevertheless, we will only cover the most typical and basic approaches.

Using active learning on an unlabeled data set involves the following steps:

- The first thing that must be done is to manually label a very small portion of this data.

- The model must be trained on some small amount of labeled data first. The model won’t be perfect, of course, but it will give us some insight into which parts of the parameter space should be labelled first to make it better.

- Following training, the model is used to forecast the class for each subsequent unlabeled data point.

- On the basis of the model’s prediction, a score is assigned to each unlabeled data point. We will outline some of the most popular possible scores in the following subsection.

- This procedure can be repeated iteratively once the best method for labeling priorities has been determined: a new model can be trained on a new labelled data set, which has been labelled based on the priority score. After the new model has been trained on the subset of data, it can be used to update the prioritization scores and continue labeling by processing the unlabeled data points. In this way, as the models improve, the labeling strategy can be continually improved.

What Is The Use Of Active Learning?

Three main strategies can be used to implement active learning:

- A stream-based selective sampling approach, in which remaining data points are assessed one-by-one, and every time the algorithm identifies a sufficiently beneficial data point it requests a label for it. It may take a lot of labor to implement this method.

- A pool-based sampling approach, in which the entire dataset (or some fraction of it) is evaluated first so the algorithm can see which data points will be most beneficial for model development. While more labor- and memory-intensive, this method is more effective than stream-based selective sampling.

- A membership query synthesis approach, where the algorithm essentially generates its own hypothetical data points. This method only works in specific circumstances where gathering precise data points is reasonable.

Active learning is one of the most exciting topics in data science today. RapidMiner Studio, which enables you to experiment with creating your own machine learning models, will get you from data to insights in a matter of minutes if you’re interested in data science but aren’t sure where to begin.

Difference Between Active Learning And Reinforcement Learning

Active learning and reinforcement learning both help models require fewer labels, but they are two different ideas.

Reinforcement Learning

It is possible to incorporate inputs from the environment when using reinforcement learning, a goal-oriented method inspired by behavioral psychology. This implies that the agent will improve and pick up new information as it is used. This is comparable to how we humans improve upon our errors. In essence, we are using a reinforcement learning strategy. There is no training phase because the agent instead learns by doing, with the help of a predetermined reward system that gives feedback on how effective a particular action was. Because it creates its own data as it goes, this kind of learning does not require input from outside sources.

Active Learning

Compared to conventional supervised learning, active learning is more similar. It is a form of semi-supervised learning, where models are trained using both labeled and unlabeled data. The concept behind semi-supervised learning is that labeling a small sample of data may produce results that are as accurate as or even more accurate than training data that has been fully labeled. Finding that sample is the only difficult part. In active learning machine learning, data is incrementally and dynamically labeled during training so that the algorithm can determine which label would be most helpful for it to learn from.