When it comes to Bayesian Machine Learning, you likely either love it or prefer to stay at a safe distance from anything Bayesian.

Bayesian ML is a paradigm for constructing statistical models based on Bayes’ Theorem. Many renowned research organizations have been developing Bayesian machine-learning tools for decades, and they still do.

Learn more about Bayesian machine learning by reading the rest of this article.

What is Bayesian Machine Learning?

Bayesian ML is a paradigm for constructing statistical models based on Bayes’ Theorem:

$$p(\theta \mid x) = \frac{p(x \mid \theta)\, p(\theta)}{p(x)}$$

As a general rule, the objective of Bayesian machine learning (ML) is to estimate the posterior distribution p(θ|x) given the likelihood p(x|θ) and the prior distribution p(θ). The likelihood can be calculated from the training data.

In fact, that is exactly what we do when we train a typical machine learning model. Maximum Likelihood Estimation (MLE) iteratively updates the model’s parameters in an effort to maximize the likelihood p(x|θ), that is, the probability of observing the training data x given the model’s parameters θ.
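As a rough illustration of that iterative update, here is a minimal sketch that fits the bias θ of a coin by gradient ascent on the log-likelihood. The coin-flip data, the learning rate, and the function names are all assumptions made for this example; they are not taken from any particular library.

```python
import math

def log_likelihood(theta, data):
    """Log-likelihood of Bernoulli data (1 = heads, 0 = tails) under bias theta."""
    heads = sum(data)
    tails = len(data) - heads
    return heads * math.log(theta) + tails * math.log(1.0 - theta)

def fit_mle(data, lr=0.01, steps=2000):
    """Gradient ascent on the mean log-likelihood, starting from theta = 0.5."""
    heads = sum(data)
    tails = len(data) - heads
    n = len(data)
    theta = 0.5
    for _ in range(steps):
        # Derivative of the mean log-likelihood with respect to theta.
        grad = (heads / theta - tails / (1.0 - theta)) / n
        theta += lr * grad
        # Keep theta strictly inside (0, 1) so the logarithms stay defined.
        theta = min(max(theta, 1e-6), 1.0 - 1e-6)
    return theta

data = [1, 1, 0, 1, 1, 0, 1, 1, 1, 0]  # made-up data: 7 heads, 3 tails
theta_mle = fit_mle(data)
print(f"MLE of theta: {theta_mle:.4f}")
```

For this toy model the answer is also available in closed form (the fraction of heads), so the loop is only there to mirror how parameters are updated step by step in larger models.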

So how is the Bayesian paradigm different? The situation is reversed: we instead aim to maximize the posterior distribution, which treats the training data as fixed and gives the probability of any parameter setting θ given that data. This process is called Maximum a Posteriori (MAP) estimation. It is usually simpler, however, to think about it in terms of the likelihood function: because p(x) does not depend on θ, maximizing the posterior is equivalent to maximizing the likelihood multiplied by the prior, p(x|θ)p(θ).
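The difference between the two estimates can be sketched with a coin-flip model. The example below assumes a Beta(2, 2) prior on the coin's bias and made-up counts of 7 heads and 3 tails, and simply searches a grid of candidate values; the prior, the data, and the function names are illustrative assumptions.

```python
import math

def log_likelihood(theta, heads, tails):
    """Bernoulli log-likelihood of the observed counts under bias theta."""
    return heads * math.log(theta) + tails * math.log(1.0 - theta)

def log_prior(theta, a=2.0, b=2.0):
    """Unnormalised log-density of a Beta(a, b) prior (assumed for illustration)."""
    return (a - 1.0) * math.log(theta) + (b - 1.0) * math.log(1.0 - theta)

heads, tails = 7, 3  # made-up data
grid = [i / 10000 for i in range(1, 10000)]  # candidate values of theta in (0, 1)

# MLE maximises the likelihood alone.
theta_mle = max(grid, key=lambda t: log_likelihood(t, heads, tails))

# MAP maximises likelihood times prior, i.e. the sum of their logs.
theta_map = max(grid, key=lambda t: log_likelihood(t, heads, tails) + log_prior(t))

print(f"MLE: {theta_mle:.4f}  MAP: {theta_map:.4f}")
```

With this prior, which mildly favours a fair coin, the MAP estimate is pulled from the MLE towards 0.5. With a flat prior the two estimates would coincide.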

Methods of Bayesian ML

Here are two methods of Bayesian ML.

MAP

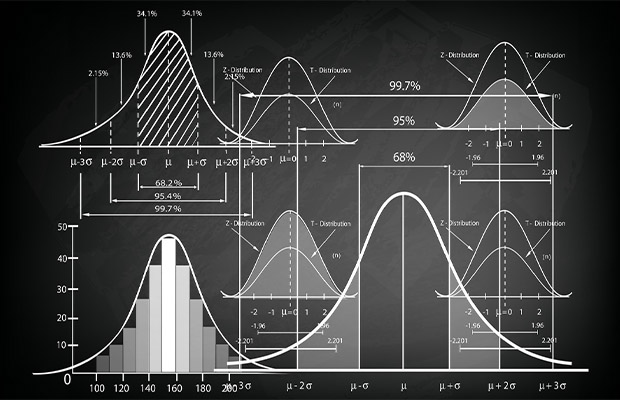

While MAP is the first step towards fully Bayesian machine learning, it still only computes what statisticians call a point estimate: a single value for a parameter, calculated from data. The drawback of a point estimate is that it reveals only a parameter’s optimal setting, which is a limited amount of information. In practice, we often want to know more, such as how confident we can be that a parameter’s value falls within a given range.

This is where the true power of Bayesian ML lies: in computing the entire posterior distribution. However, this is a complex matter. Distributions are not neatly packaged mathematical objects that can be manipulated at will. They often come to us as intractable integrals over continuous parameter spaces that cannot be calculated analytically. Therefore, a number of fascinating Bayesian methods have been devised that can be used to sample (i.e. draw sample values) from the posterior distribution.
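To make the contrast with a point estimate concrete, the sketch below approximates the whole posterior for a single coin-bias parameter by brute force on a grid, then asks how much posterior probability lies in a range. The Beta(2, 2) prior, the counts, and the helper names are assumptions for illustration. A grid like this is only practical for one or two parameters, which is one reason sampling methods are needed for larger models.

```python
def unnormalised_posterior(theta, heads, tails, a=2.0, b=2.0):
    """Likelihood times a Beta(a, b) prior, up to a constant factor."""
    return theta ** (heads + a - 1.0) * (1.0 - theta) ** (tails + b - 1.0)

heads, tails = 7, 3  # made-up data
n_cells = 2000
width = 1.0 / n_cells
midpoints = [(i + 0.5) * width for i in range(n_cells)]

weights = [unnormalised_posterior(t, heads, tails) for t in midpoints]
# Numerical stand-in for the normalising integral p(x).
evidence = sum(weights) * width
posterior = [w / evidence for w in weights]  # posterior density at each midpoint

def prob_between(lo, hi):
    """Posterior probability that theta lies in [lo, hi] (midpoint rule)."""
    return sum(p * width for t, p in zip(midpoints, posterior) if lo <= t <= hi)

posterior_mean = sum(t * p * width for t, p in zip(midpoints, posterior))
p_in_range = prob_between(0.5, 0.8)
print(f"posterior mean: {posterior_mean:.4f}")
print(f"P(0.5 <= theta <= 0.8 | data): {p_in_range:.4f}")
```

The second printed number is exactly the kind of statement a point estimate cannot make: a probability that the parameter falls within a chosen range.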

MCMC

Probably the most famous of these is Markov Chain Monte Carlo (MCMC), an umbrella term that covers a number of subsidiary methods such as Gibbs sampling and slice sampling. The math behind MCMC is challenging but fascinating. In essence, these techniques work by constructing a Markov chain whose stationary distribution is the posterior, so that samples drawn from the chain after it has settled can be treated as samples from the posterior. A number of successor algorithms improve on the MCMC methodology by using gradient information to help the sampler navigate the parameter space more efficiently.
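As a minimal sketch of the idea, here is random-walk Metropolis, one of the simplest members of the MCMC family (it is neither Gibbs nor slice sampling, and it uses no gradient information). It targets the posterior of a coin's bias under an assumed Beta(2, 2) prior with made-up counts of 7 heads and 3 tails. The step size, burn-in length, and function names are illustrative choices, not recommendations.

```python
import math
import random

def log_posterior(theta, heads=7, tails=3, a=2.0, b=2.0):
    """Unnormalised log-posterior of a coin's bias; -inf outside (0, 1)."""
    if not 0.0 < theta < 1.0:
        return -math.inf
    return (heads + a - 1.0) * math.log(theta) + (tails + b - 1.0) * math.log(1.0 - theta)

def metropolis(log_target, n_samples, start=0.5, step=0.2, burn_in=1000, seed=0):
    """Random-walk Metropolis sampler for a one-dimensional target."""
    rng = random.Random(seed)
    current = start
    current_lp = log_target(current)
    samples = []
    for i in range(n_samples + burn_in):
        # Symmetric Gaussian proposal centred on the current state.
        proposal = current + rng.gauss(0.0, step)
        proposal_lp = log_target(proposal)
        # Accept with probability min(1, target(proposal) / target(current)).
        accept_prob = math.exp(min(0.0, proposal_lp - current_lp))
        if rng.random() < accept_prob:
            current, current_lp = proposal, proposal_lp
        # Discard the early iterations, before the chain has settled.
        if i >= burn_in:
            samples.append(current)
    return samples

samples = metropolis(log_posterior, n_samples=20000)
sample_mean = sum(samples) / len(samples)
print(f"posterior mean estimated from samples: {sample_mean:.3f}")
```

Note that the sampler only ever needs the posterior up to a constant, because the acceptance ratio cancels the normalising term p(x). That is what lets MCMC sidestep the intractable integral.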

MCMC and its relatives are often used as a computational cog in a broader Bayesian model. One of their drawbacks is that they can be computationally expensive, though this has improved greatly in recent years. Nevertheless, it is best to use the simplest tool that does the job.

To that end, there are numerous simpler techniques that often suffice. For example, there are Bayesian equivalents of linear and logistic regression that use something called the Laplace Approximation. This algorithm produces an analytical approximation of the posterior distribution by computing a second-order Taylor expansion of the log-posterior centered at the MAP estimate, which amounts to approximating the posterior with a Gaussian.

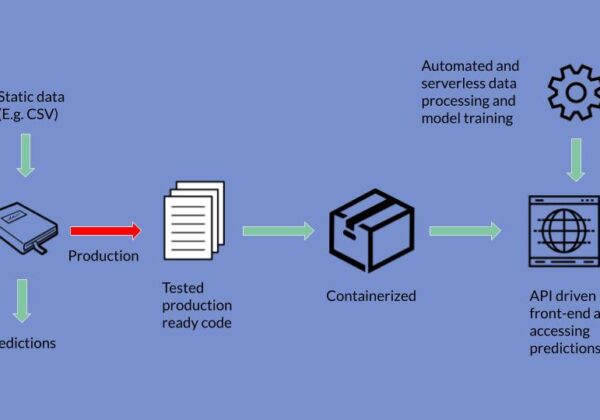
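The Laplace Approximation described above can be sketched in one dimension as follows. The example assumes the same toy coin-bias posterior (a Beta(2, 2) prior with made-up counts of 7 heads and 3 tails), finds the MAP estimate with Newton's method, and uses finite differences for the derivatives. All function names and step sizes are assumptions for illustration.

```python
import math

def log_posterior(theta, heads=7, tails=3, a=2.0, b=2.0):
    """Unnormalised log-posterior of a coin's bias theta in (0, 1)."""
    return (heads + a - 1.0) * math.log(theta) + (tails + b - 1.0) * math.log(1.0 - theta)

def first_derivative(f, x, h=1e-5):
    """Central finite-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def second_derivative(f, x, h=1e-4):
    """Central finite-difference estimate of f''(x)."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)

def laplace_approximation(f, start=0.5, iterations=50):
    """Return (mean, variance) of a Gaussian fitted at the mode of exp(f)."""
    x = start
    for _ in range(iterations):
        # Newton step towards the maximum of the log-posterior (the MAP estimate).
        x -= first_derivative(f, x) / second_derivative(f, x)
    # The curvature at the mode sets the Gaussian's variance.
    variance = -1.0 / second_derivative(f, x)
    return x, variance

mode, variance = laplace_approximation(log_posterior)
print(f"Gaussian approximation: mean={mode:.4f}, std={math.sqrt(variance):.4f}")
```

The Gaussian is centred on the MAP estimate, and its variance is the negative inverse of the log-posterior's second derivative there. For a skewed posterior this is only a local approximation, so it can misstate the probability mass in the tails.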

The Presence of Bayesian Models in ML

Although Bayesian models are not as common in industry as their alternatives, they are starting to see a comeback as a result of the recent development of computationally tractable sampling algorithms, easier access to CPU/GPU processing power, and their diffusion outside of academia.

They are particularly helpful in low-data domains, where deep learning approaches frequently fail, and in settings where the ability to reason about a model is crucial. They are widely used in bioinformatics and healthcare, where taking a point estimate at face value can have disastrous results and a deeper understanding of the underlying model is required.

For instance, one would not want to blindly trust the output of an MRI cancer prediction model without at least some understanding of how the model works. Similarly, variant callers in bioinformatics, including Mutect2 and Strelka, rely heavily on Bayesian techniques.

These software programs take in DNA reads from a person’s genome and label “variant” alleles which differ from those in the reference. Despite the complexity they add to implementation, Bayesian methods make a lot of sense in this field where accuracy and statistical soundness are crucial.

Overall, Bayesian machine learning (ML) is a rapidly expanding subfield of machine learning, and it is expected to continue to grow in the years to come as new computer hardware and statistical techniques are incorporated into the canon.

Conclusion on Bayesian Machine Learning

I hope this article has enlightened you a little bit about the value of Bayesian machine learning. It is undoubtedly not a panacea, and there are many situations in which it may not be the best option. But if you carefully balance the benefits and drawbacks, Bayesian methods can be a very helpful tool.

In addition, I would be open to a more in-depth discussion on the subject, either in the comments or over private channels.

FAQs

Is a Bayesian Model a Machine Learning Model?

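Bayesian inference is better described as a framework for building and fitting statistical models than as a single model. Machine learning models built on it are less widely used than deep learning or classical regression models.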

What is Bayesian Theory in AI?

Bayes’ Theorem is a formula for calculating conditional probabilities, that is, the probability of an event occurring given that another event has occurred. Conditioning on more evidence can produce more accurate estimates, because the result takes more of the available data into account.