Although machine learning is now viewed as the panacea for all issues, it is not always the solution.

Despite being very helpful for many projects, ML isn’t always the best choice. In some circumstances, ML implementation is unnecessary, makes no sense, and might even make things worse. There are some limitations of machine learning that we cannot avoid currently.

In this article, I want to persuade the reader that there are situations in which machine learning is the best course of action and situations in which it is not.

Table of Contents

Why Should We Care About Machine Learning?

The sensors in smart devices, search engines, e-commerce platforms, social media, and other platforms are constantly exploding with a ton of potentially valuable customer data. It might contain information on user behavior, demographics, past purchases, and other inconsequential facts about how they use the Internet. Massive amounts of this data, also known as big data, are produced. Often, it is so unstructured that more than just an incredibly powerful computer is required to process it. Their automated big data processing techniques make excellent use of machine learning techniques.

The development of software or process algorithms is what the “ML algorithm” is all about—it’s not some kind of magic that causes complicated things to happen. In particular, these algorithms are created with the capacity to learn from statistical data analysis, obviating the need for laborious manual programming. How does this work?

To produce predicted outputs (the so-called training data), the input data that you pass through the computing model is processed and statistically analyzed. The entire processing phase is automated by machine learning, along with the creation of computing models and the acquisition of results. The fact that it builds future scenarios based on the most likely outcomes after learning from previous experiences is more significant.

Limitations Of Machine Learning

Limitation 1: Ethical Concerns

Of course, trusting algorithms have many benefits. Using computer algorithms to automate procedures, analyze vast amounts of data, and make difficult decisions has been advantageous to humanity. It is not without disadvantages, though, to trust algorithms. Every stage of algorithm development is susceptible to bias. Furthermore, bias cannot really be eliminated because algorithms are created and trained by people.

Still unresolved are many ethical issues. When something goes wrong, for instance, who is to blame? Take the most obvious case in point: self-driving cars. In the event of a traffic accident, who should be held responsible? Who is responsible for driving: the car company, the software creator, or the driver?

One thing is certain: ML is unable to make morally challenging decisions on its own. In the not-too-distant future, we will need to develop a framework to address ethical issues with ML technology.

Limitation 2: Deterministic Problems

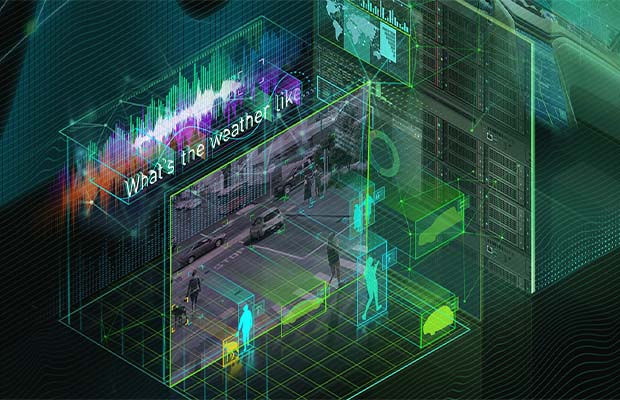

Weather forecasting, as well as studies on the climate and the atmosphere, are among the many applications of ML, a potent technology. Sensors that measure environmental indicators like temperature, pressure, and humidity can be adjusted by using ML models to calibrate and correct them.

For instance, models can be programmed to simulate weather and simulate atmospheric emissions to forecast pollution. This can be computationally demanding and take up to a month, depending on the quantity of data and the complexity of the model.

Can ML be used to predict the weather by people? Maybe. Experts can use information from weather stations and satellites in addition to a basic forecasting algorithm. They can supply the information needed to train a neural network to forecast the weather for the next day, such as air pressure in a particular area, air humidity, wind speed, etc.

Neural networks, however, are unable to comprehend the physics or laws governing a weather system. For instance, while ML is capable of making predictions, calculations in intermediate fields like density may yield results that are contravening the fundamental principles of physics. Cause-and-effect relationships are not recognized by AI. Although the neural network can connect input and output data, it cannot determine why they are related.

Limitation 3: Data

The most obvious limitation is this one. If you feed a model poorly, then it will only give you poor results. This may show up in one of two ways: lack of data, and lack of good data.

Lack of Data

Before they can start to produce useful results, many machine learning algorithms need a lot of data. Neural networks are an effective illustration of this. Considering how much data-hungry neural networks are, training data must be plentiful. The larger the architecture, the more data is needed to produce viable results. Although data augmentation and reuse of data are both undesirable options, having more data is always the better choice.

Use the data if you can get it.

Lack of Good Data

This is not the same as the comment above, despite appearances. Let’s say you believe you can trick your neural network by creating 10,000 fictitious data points to feed it. What happens when you insert it?

When you test it on an unknown data set after it has trained on its own, it won’t perform well. Although you had the data, its quality was subpar.

Similar to how a deficiency in features can make your algorithm perform poorly, a deficiency in ground truth data can restrict the potential of your model. No business will use a machine learning model that performs worse than human error.

Similar to this, using a model that was developed using a particular set of data in one situation may not always work as well in another. I’ve found that breast cancer prediction is the best illustration of this so far.

There are many images in mammography databases, but one issue that has recently caused serious problems is that nearly all of the x-rays are from white women. Although it may not seem significant, black women have been shown to have a 42 percent higher risk of dying from breast cancer due to a variety of factors, some of which may include variations in detection and access to healthcare. In this case, training an algorithm primarily on white women has a negative effect on black women.

What is needed in this specific case is a larger number of x-rays of black patients in the training database, more features relevant to the cause of this 42 percent increased likelihood, and for the algorithm to be more equitable by stratifying the dataset along the relevant axes

Limitation 4: Misapplication

Related to the second limitation discussed previously, there is purported to be a “crisis of machine learning in academic research” whereby people blindly use machine learning to try and analyze systems that are either deterministic or stochastic in nature.

Machine learning will work on deterministic systems for the reasons mentioned in limitation 2, but the algorithm won’t learn the relationship between the two variables and won’t be able to tell when it is defying physical laws. We merely provided the system with some inputs and outputs and instructed it to learn the relationship; like someone who is translating word for word from a dictionary, the algorithm will only seem to have a cursory understanding of the underlying physics.

Things are a bit more ambiguous in stochastic (random) systems. There are two main manifestations of the machine learning crisis for random systems:

- P-hacking

- Scope of the analysis

P-hacking

When one has access to large data, which may have hundreds, thousands, or even millions of variables, it is not too difficult to find a statistically significant result (given that the level of statistical significance needed for most scientific research is p < 0.05). As a result, it is common to discover erroneous correlations through the process known as p-hacking, which involves searching through mountains of data until a correlation with statistically significant results is discovered. These aren’t actual correlations; rather, they’re just a result of the measurements’ noise.

Due to this, some people have started “fishing” for statistically significant correlations in large data sets and passing these off as real correlations. Sometimes this is a simple error (in which case the scientist should receive better training), but other times it is done to boost a researcher’s publication count because even in academia, competition is fierce and people will do anything to boost their metrics.

Scope of the Analysis

Statistical modeling is inherently confirmatory, whereas machine learning is inherently exploratory, so there are inherent differences in the scope of the analysis between the two.

We can think of confirmatory analysis and models as the kind of work one would complete for a Ph.D. program or in a research field. Consider yourself trying to create a theoretical framework with a consultant to analyze a system in the real world. You can conduct tests to ascertain the viability of your hypotheses after carefully planning experiments and developing a set of pre-defined features that this system is influenced by.

Conversely, the exploratory lacks a number of the characteristics of the confirmatory analysis. In fact, the sheer volume of data causes the confirmatory approaches to completely fail when dealing with truly massive amounts of data and information. In other words, in the presence of hundreds, much fewer thousands, much fewer millions of features, it is simply not possible to carefully lay out a finite set of testable hypotheses.

Therefore, and once more generally, machine learning algorithms and approaches are best suited for exploratory predictive modeling and classification with large amounts of data and computationally complex features. Some will argue that they can be applied to “small” data, but why would someone do that when traditional multivariate statistical methods are so much more insightful?

ML is a field that primarily deals with issues related to computer science, information technology, and other related fields. These issues can be either theoretical or practical. As a result, it is connected to disciplines like physics, mathematics, probability, and statistics, but ML is really a field unto itself, one that is free of the issues brought up in the other disciplines. While many of the solutions developed by ML practitioners and experts are painfully incorrect, they do the job.

Limitation 5: Interpretability

The interpretability of machine learning is one of its main issues. If a company that only employs traditional statistical techniques does not believe the model to be interpretable, an AI consultancy firm’s pitch to them will be immediately rejected. How likely is it that your client will trust you and your knowledge if you can’t persuade them that you know how the algorithm made its decision?

If these models cannot be understood by humans, they lose their usefulness as models. Human interpretation is governed by rules that go far beyond technical skill. For this reason, if machine learning techniques are to be used in practice, interpretability is a crucial quality to pursue.

Machine learning researchers have focused primarily on the burgeoning -omics sciences (genomics, proteomics, metabolomics, and the like) because of their reliance on large, complex databases. Despite their apparent success, they struggle because their methods are not interpretable.

Opportunities That Machine Learning Offered

Let’s first consider the opportunities for machine learning before learning its limitations. Big data’s untapped potential is revealed by artificial intelligence. Such computing algorithms will make customer segmentation simpler, produce more accurate targeting strategies, and control potential risks if a business has a large customer base. You can complete difficult business tasks with improved analysis techniques and faster processing: it provides automation and gives a way to cost-efficiency.

Data Mining Automation

The digital era produces and piles ups tons of data at such high speed that manual processing of information along with its interpretation is becoming less and less possible for us humans. Data mining is a method that combines database analysis with data exploration to produce information that can be used to inform decisions. The data mining process can be automated using machine learning techniques. Its information creates reliable hypotheses and predictions.

Data-rich Task Automation

In machine learning, tasks such as data generation and analysis are carried out by autonomous computer systems that don’t require ongoing manual reprogramming. It enables your employees and you as a business owner to focus your efforts on other corporate objectives and projects.

This automation function allows Google’s search engine to index websites and Apple’s Siri to respond to user inquiries. Task automation benefits businesses by enabling better service delivery, higher-quality products, and creative business expansion strategies. The development of algorithms for face recognition in biometric authentication, medical diagnostics, and fraud detection and prevention in banks are possible.

Machine learning is no longer only used to support the market’s major players. The advantages of ML are comparable for large and small businesses. Consider this: At one point, startups and small businesses were eagerly embracing such enormous novelties as SaaS and cloud services to manage their internal operations efficiently and securely. Due to its adaptability, artificial intelligence has risen to the top of the list of technologies. With it, you can streamline customer communications, automate workflow, and enhance your development strategy.

Knowing what machine learning is capable of and how it functions will help you decide whether to use it. But ML has its limitations, just like any other technology, and these cannot be ignored. Let’s examine machine learning’s limitations and how knowing them can help you steer clear of unintended system behavior and undesirable results.

Ability To Learn And Improve In Time

By using prior outcomes and experience, machine learning systems of particular software or programs develop their ability to make assumptions. This cycle never ends. The ability of algorithms to “understand” what events are likely to happen improves over time. The likelihood that the outputs produced by the system will be accurate increases as the system completes more learning cycles.

A few instances of how ML can help with creating prognoses and informing about impending changes include real-time market research, economic modeling, assessments of services and goods consumption, and more.

When Machine Learning Application Is Not Good?

ML should almost always not be used in the absence of labeled data and first-hand knowledge. For deep learning models, labeled data is almost always required. Data labeling is the process of organizing already “clean” data for machine learning by adding labels to it. It is not advised to use ML if there is a lack of sufficient high-quality labeled data.

Because ML requires more complex data than other technologies, designing mission-critical security systems is another situation in which AI should be avoided.

Is Ml Still Useful In Spite Of All Its Drawbacks?

There is no denying that AI has provided humanity with a number of exciting new opportunities. Some have, however, also come to believe that machine learning algorithms are capable of resolving every issue facing mankind.

In situations where a human would normally perform the task, machine learning systems perform at their best. It can do well if it isn’t asked to be creative, intuitive, or use common sense.

Algorithms learn well from explicit data, but they lack the human capacity for understanding the world and its workings. An ML system can be taught, for instance, what a cup looks like, but it is unable to comprehend that the cup contains coffee.

When people interact with AI, they experience these constraints, similar to common sense and intuition. For instance, when given logical, intuitive questions, chatbots and voice assistants frequently fail. Autonomous systems have blind spots and miss potentially crucial stimuli that an individual would notice right away.

The power of machine learning makes it possible for people to work more effectively and live better lives, but it cannot completely replace them because it is not yet capable of performing many tasks. ML has some benefits, but there are drawbacks as well.

The Bottom Line

There are restrictions that, at least temporarily, prevent that from happening, as I hope I have explained in this article. It is not possible to accept that both the benefits and difficulties presented by ML are unassailable. How complicated the ML models are and the issues they will be trained to address will always be determining factors.

Please leave a comment if you have any further queries.

Read More: What Is Machine Learning Model Training?