Here is a complete guide to reinforcement learning (RL) in Python to help you understand RL better.

A classic illustration of RL is training a pet dog to fetch a stick and bring it back to its owner.

If the dog fails to fetch the stick, or fetches the wrong one, it gets no treat; otherwise, it is rewarded with a treat.

This blog post explains reinforcement learning and provides a straightforward Python implementation.

What Do You Mean by Reinforcement Learning?

Let’s quickly review machine learning before moving on to reinforcement learning, abbreviated as RL.

In some situations, plenty of data is available, but no explicit algorithm exists that tells a machine how to produce the desired result.

In these circumstances, machine learning can help. Given example inputs and their desired outputs, machine learning derives the logic needed to predict the output for a new, previously unseen input.
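As a rough illustration of learning logic from inputs and desired outputs, here is a minimal sketch that fits a straight line to a handful of examples and then predicts the output for an input it has never seen. The data and the function names `fit_line` and `predict` are made up for this example, and least squares is just one simple way to do the fitting.

```python
# Hypothetical training data: inputs and the desired outputs (here y = 2x + 1)
inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
outputs = [1.0, 3.0, 5.0, 7.0, 9.0]


def fit_line(xs, ys):
    """Learn a slope and an intercept from examples using least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(inputs, outputs)


def predict(x):
    """Apply the learned rule to an input that was not in the training data."""
    return slope * x + intercept


print(predict(10.0))
```

Nobody wrote the rule "multiply by 2 and add 1" by hand; it was recovered from the examples.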

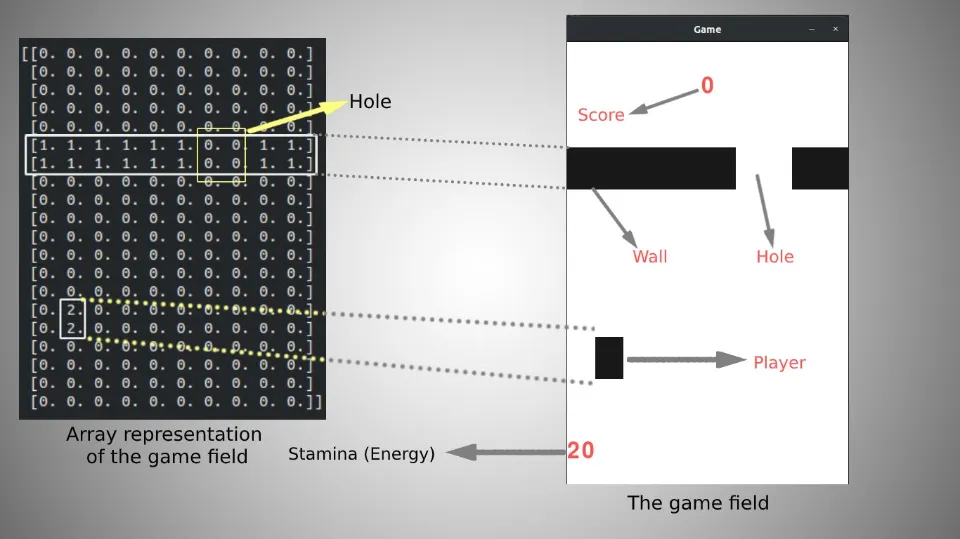

Basic Implementation of RL in Python

It’s time to get our hands dirty with some snippets!!!

We can visualize how RL systems operate internally with the help of the code below. However, this is only a straightforward implementation to grasp the RL concepts that we have so far discussed.

The random module is part of Python’s standard library, so nothing needs to be installed. Run the code on your local system or use Google Colab.

In a real system, some logic would determine whether the action taken by the agent was correct or incorrect. In this example, however, we simply use the random module to generate a reward at random.

For the implementation, we will use two classes, namely MyEnvironment and myAgent. In this example, consider a game that the agent must finish in at most twenty steps.

MyEnvironment:

This is the environment class that represents the agent’s environment. The class needs to have member functions that can retrieve the agent’s current observation or state, calculate reward and punishment points, and keep track of how many more steps the agent can complete before the game is over.

With the above understanding, let us define the environment class as follows:

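```python
import random


# Create the environment class
class MyEnvironment:
    def __init__(self):
        # The agent may take at most 20 steps per game
        self.remaining_steps = 20

    def get_observation(self):
        # Fixed, arbitrary coordinates describing the current state
        return [1.0, 2.0, 1.0]

    def get_actions(self):
        # The two actions available to the agent
        return [-1, 1]

    def check_is_done(self):
        # The game is over once no steps remain
        return self.remaining_steps == 0

    def action(self, chosen_action):
        if self.check_is_done():
            raise Exception("Game over")
        self.remaining_steps -= 1
        # Placeholder reward: a random float in [0.0, 1.0)
        return random.random()
```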

__init__() is the default constructor which initializes the number of steps remaining to 20 before the agent starts playing the game.

The get_observation() function returns the agent’s current state, expressed as coordinates. The coordinate values (1.0, 2.0, and 1.0) are arbitrary, and so is their number; I have used 3, but you can assume any number of coordinates and any value for each of them. These coordinate values essentially provide information about the environment. To keep the implementation simple, I did not use any logic to compute them.

At each step, the agent chooses one of two possible actions, which I have set to -1 and +1. The get_actions() function returns the list of these two actions. When the agent performs an action, the environment responds with a reward.

The next function, check_is_done(), checks whether the agent has run out of steps. If it returns True, the game is over and the agent must not move any further.

The environment uses the action() function to return reward points to the agent based on its action, which the function receives as an integer parameter. The function first calls check_is_done() to determine whether the game is finished. If it is, the function raises an exception and the game ends; otherwise, it reduces the number of remaining steps by one. In this example, the reward is simply a random number between 0.0 and 1.0 produced by the random module, so the chosen action does not influence it.

Once more, simplicity has come at the expense of logic. In real-world applications, some logic would compute this reward.
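To give a feel for what such reward logic might look like, here is a minimal sketch of an environment whose reward depends on the action. The class name `GuessEnvironment`, the `target` parameter, and the +1.0 / -1.0 reward values are all assumptions made for this illustration; they are not part of the implementation above.

```python
class GuessEnvironment:
    """Hypothetical environment whose reward depends on the chosen action."""

    def __init__(self, target=1, steps=20):
        self.target = target            # the action treated as correct (assumed)
        self.remaining_steps = steps

    def get_actions(self):
        return [-1, 1]

    def check_is_done(self):
        return self.remaining_steps == 0

    def action(self, chosen_action):
        if self.check_is_done():
            raise Exception("Game over")
        self.remaining_steps -= 1
        # Reward logic: +1.0 for the correct action, -1.0 for any other
        return 1.0 if chosen_action == self.target else -1.0


env = GuessEnvironment(target=1, steps=3)
print(env.action(1))     # the correct action
print(env.action(-1))    # the wrong action
```

With a rule like this, the agent’s choice finally matters: picking the target action earns points and picking the other one loses them.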

myAgent:

Compared to the environment class, the agent class is less complex. The agent performs an action while collecting rewards from its environment. We will require a member function and a data member for this.

With this knowledge, the agent class can be defined as follows:

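```python
class myAgent:
    def __init__(self):
        # No rewards have been collected at the start of the game
        self.total_rewards = 0.0

    def step(self, ob: MyEnvironment):
        # Observe the current state of the environment
        curr_obs = ob.get_observation()
        print(curr_obs)
        # Look up the actions that are available
        curr_action = ob.get_actions()
        print(curr_action)
        # Pick an action at random and collect the reward for it
        curr_reward = ob.action(random.choice(curr_action))
        self.total_rewards += curr_reward
        print("Total rewards so far= %.3f " % self.total_rewards)
```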

The total reward points are initialized to 0.0 by the __init__() default constructor because the agent doesn’t receive any rewards at the start of the game.

The step() member function needs access to the environment the agent is exploring, so an object of the environment class is passed to it as a parameter.

By calling get_observation() on the environment object, the function first obtains the agent’s current state. It then calls get_actions() to obtain the actions available to the agent (-1 or 1). The random.choice() function selects one of them at random, and this value is treated as the agent’s action for the current step. (In a real agent, this value would not be random; it would be calculated with the aid of some logic.) Passing this value to the MyEnvironment class’s action() function yields the current reward, which is added to the total rewards collected by the agent so far.

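Note that in this simplified example the reward is always a value in [0.0, 1.0), because it comes from random.random(); it is never negative. In a real environment, the reward can be positive or negative, and either way it is added to the total rewards of the agent.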
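The agent above picks its action at random. One possible way to replace that with some logic is an epsilon-greedy rule: most of the time the agent repeats the action with the best average reward so far, and occasionally it explores. The sketch below is only an illustration; `GreedyAgent`, `toy_reward`, and the epsilon value of 0.1 are assumptions, not part of the original implementation.

```python
import random


class GreedyAgent:
    """Hypothetical agent that prefers the action with the best average reward."""

    def __init__(self, actions, epsilon=0.1):
        self.epsilon = epsilon                     # chance of exploring (assumed)
        self.totals = {a: 0.0 for a in actions}    # summed reward per action
        self.counts = {a: 0 for a in actions}      # times each action was tried

    def average(self, action):
        count = self.counts[action]
        return self.totals[action] / count if count else 0.0

    def choose(self):
        # Try every action at least once before comparing averages
        untried = [a for a, c in self.counts.items() if c == 0]
        if untried:
            return untried[0]
        # Explore occasionally
        if random.random() < self.epsilon:
            return random.choice(list(self.totals))
        # Otherwise exploit the best-looking action
        return max(self.totals, key=self.average)

    def learn(self, action, reward):
        self.totals[action] += reward
        self.counts[action] += 1


def toy_reward(action):
    # Assumed reward rule for this sketch: +1 pays off, -1 does not
    return 1.0 if action == 1 else -1.0


random.seed(0)
agent = GreedyAgent(actions=[-1, 1])
for _ in range(200):
    chosen = agent.choose()
    agent.learn(chosen, toy_reward(chosen))

print(agent.counts)
```

After a few steps the agent should settle on the action that pays off, while the small exploration rate keeps it checking the alternative from time to time.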

Finally, create objects of the above classes and execute as follows:

```python
if __name__ == '__main__':
    obj = MyEnvironment()
    agent = myAgent()
    step_number = 0

    # Keep stepping until the environment reports that the game is over
    while not obj.check_is_done():
        step_number += 1
        print("Step-", step_number)
        agent.step(obj)

    print("Total reward is %.3f " % agent.total_rewards)
```

In Python, an object is created simply by calling class_name(). Our __init__() constructors take no parameters other than self, so it is that simple in our case.

obj is an object of the MyEnvironment class and agent is an object of the myAgent class. Inside the while loop, the agent performs an action by calling the step() function of the myAgent class and passing obj, the object that refers to its environment. The loop repeats for as long as the game is still in progress.
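Because every reward is random, the final total changes from run to run. The sketch below mirrors the loop above without the printing, using a hypothetical helper named `play_game`, to show two things: a fixed seed makes a run reproducible, and over many games the total averages out near 20 × 0.5 = 10, since each reward is drawn uniformly from [0.0, 1.0).

```python
import random


def play_game(steps=20, seed=None):
    """Sum `steps` random rewards, one per step of the game."""
    rng = random.Random(seed)    # private generator, so other code is unaffected
    total = 0.0
    for _ in range(steps):
        total += rng.random()    # same placeholder reward as MyEnvironment.action()
    return total


# The same seed always reproduces the same total
print(play_game(seed=42) == play_game(seed=42))

# Averaged over many games, the total settles near 10
totals = [play_game(seed=s) for s in range(1000)]
print(sum(totals) / len(totals))
```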

Important Terminologies in RL

In this article, we will discuss the following terms:

- Agent

- Environment

- State

- Action

- Reward

Agent:

In the dog example from the introduction, the dog is the agent, and its aim is to maximize its reward. It picks up new skills through trial and error, acting in its environment and finding out whether each action earns a reward.

To determine what must be done at a specific timestep, it follows some policy.

For instance, a self-driving car in training receives a positive reward if it avoids the obstacles in its path. It receives a negative reward, or punishment, if it hits an obstacle.
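A policy can be pictured as a mapping from states to actions. The following is a minimal sketch for a toy self-driving car; the state names, the action names, and the +1.0 / -1.0 reward values are invented for illustration.

```python
# Hypothetical policy: which action to take in each state
policy = {
    "obstacle_ahead": "turn_left",
    "obstacle_left": "turn_right",
    "obstacle_right": "turn_left",
    "road_clear": "go_straight",
}


def choose_action(state):
    """Look up the action the policy prescribes for the given state."""
    return policy[state]


def reward_for(hit_obstacle):
    """Positive reward for avoiding an obstacle, negative for hitting one."""
    return -1.0 if hit_obstacle else 1.0


print(choose_action("obstacle_left"))
print(reward_for(hit_obstacle=False))
```

In a real RL system the policy is not written by hand like this; it is adjusted over time so that the rewards the agent collects increase.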

Environment:

In the previous example, the track along which the car must ride is its environment. In the dog’s example, the environment is the ground on which it runs in search of the stick.

State:

“Where is the agent now?”

The answer to this question is the state. It is a snapshot of the environment, including the agent’s place in it, at that precise moment.

Action:

It is defined as the move made by the agent, based on its environment, at a particular point in time.

For instance, the vehicle might veer to the left or to the right. If it turns left, then turning left is the action the car takes.

Reward:

As discussed so far, an agent receives a reward based on its action. The environment gives the agent a positive or negative reward depending on the action it took.
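The five terms can be seen working together in a small loop. The sketch below revisits the dog-and-stick example under invented assumptions: the ground is a strip of five cells, the stick lies in cell 3, and the reward values are +1.0 for reaching the stick and -0.1 for every other move. The class name `Field` is hypothetical.

```python
import random


class Field:
    """Environment: a strip of ground with a stick at position 3 (assumed layout)."""

    def __init__(self):
        self.dog_position = 0      # state: where the agent is now
        self.stick_position = 3

    def get_state(self):
        return self.dog_position

    def step(self, action):
        # Action: move one cell left (-1) or right (+1), staying on the strip
        self.dog_position = max(0, min(4, self.dog_position + action))
        # Reward: a treat for reaching the stick, a small penalty otherwise
        return 1.0 if self.dog_position == self.stick_position else -0.1


field = Field()
rng = random.Random(0)
total_reward = 0.0

for _ in range(50):                    # cap the number of moves
    state = field.get_state()          # the agent observes the state
    action = rng.choice([-1, 1])       # the agent (the dog) picks an action
    reward = field.step(action)        # the environment answers with a reward
    total_reward += reward
    if reward > 0:                     # the dog has found the stick
        break

print(field.get_state(), total_reward)
```

Here the dog still moves at random; learning would mean using the rewards it collects to choose better actions over time.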

Conclusion on Python Reinforcement Learning

In practice, reinforcement learning is very complex because there cannot be any rewinds or what-ifs (we cannot stop an AI system that is running in real time and ask it to show the user something else!).

Also, a real-time system may have to respond to millions of users within just a few milliseconds. For systems operating in the real world, exploration has real consequences, and there is no undo option available.